In today's AI-driven world, businesses rely on large language models (LLMs) for insights. But there's a fundamental issue: many AI solutions draw their knowledge from the web or from the model's training data instead of an organization's own data. For real-time decision-making, we need a better approach: direct integration with stored telemetry data. This keeps answers accurate and consistent without depending on external sources.

Our solution? An AI Assistant that connects directly to internal data sources while maintaining strict separation from public knowledge bases. In this post, we'll explore the core architecture, key components, and why this approach is critical for organizations that demand precise, real-time insights from their own data.

The key requirement for an effective AI-powered data system is ensuring insights come exclusively from internal data, not from web scraping or the model's pre-trained knowledge. This presents several challenges:

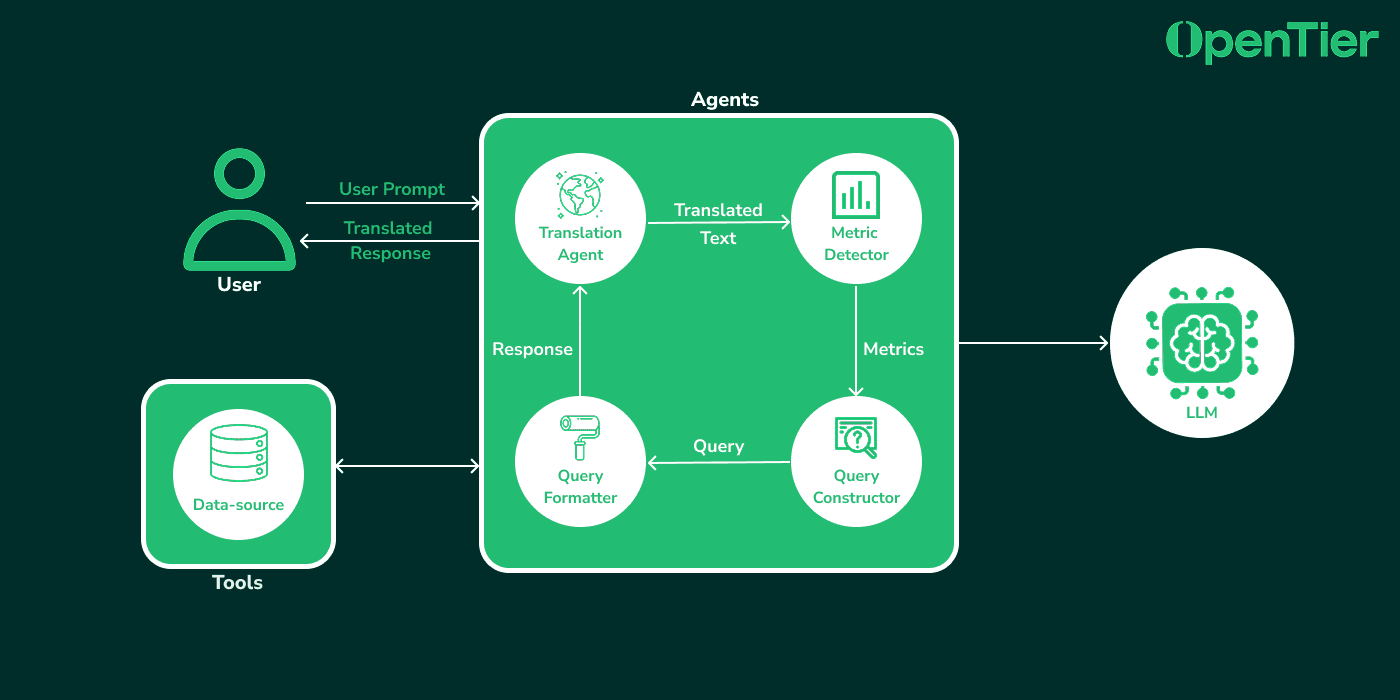

Our AI Assistant follows a structured architecture designed to process queries efficiently and provide real-time insights.

1. Data Gateway Layer

2. AI Processing Layer

The flow to process the queries and retrieve real-time data:

1. User Prompt → The user submits a query, either asking for insights from internal data or asking a question on a related topic.

2. AI Agents → The agent interprets the query, determines if it needs translation to English, and assesses whether it requests data. If data is needed, it decides the best approach to retrieve relevant information. Otherwise, it relies on the LLM’s built-in knowledge to provide an appropriate response.

3. Response Formatting → The AI Agent receives the raw data, structures it into a meaningful response, and returns it to the user in the language of the original prompt.
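The flow above can be sketched in a few lines of Python. This is a minimal illustration, not the actual implementation: `AgentDeps`, `handle_prompt`, and every hook name are hypothetical, and each hook stands in for what would be an LLM call or a database client in a real system.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class AgentDeps:
    """Hypothetical hooks; each would wrap an LLM call or a database client."""
    detect_language: Callable[[str], str]             # e.g. returns "en", "de", "ar"
    translate: Callable[[str, str], str]              # (text, target_language) -> text
    needs_data: Callable[[str], bool]                 # does the prompt request stored data?
    fetch_records: Callable[[str], Sequence[dict]]    # query the internal data source
    summarize: Callable[[str, Sequence[dict]], str]   # turn raw records into a reply
    answer_directly: Callable[[str], str]             # LLM answer when no data is needed


def handle_prompt(prompt: str, deps: AgentDeps) -> str:
    # Step 1: normalize the prompt to English, remembering the original language.
    language = deps.detect_language(prompt)
    english = prompt if language == "en" else deps.translate(prompt, "en")

    # Step 2: decide whether the prompt needs data from the internal source.
    if deps.needs_data(english):
        records = deps.fetch_records(english)
        reply = deps.summarize(english, records)
    else:
        reply = deps.answer_directly(english)

    # Step 3: return the reply in the language of the original prompt.
    return reply if language == "en" else deps.translate(reply, language)
```

Passing the hooks in as plain callables keeps the branching logic testable without a model or a database behind it.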

1. Translation Agent

2. Metric Detector

3. Query Constructor

4. Query Formatter

5. Prepare for Translation

6. Translation Agent (Second Pass)
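To make the middle stages more concrete, here is a rough sketch of what a metric detector, query constructor, and query formatter could look like. It assumes an InfluxDB backend queried with Flux; the metric catalogue, the `telemetry` bucket name, and all function names are hypothetical, and the translation stages are left out. A production detector would more likely rely on the LLM than on keyword matching.

```python
import re
from typing import Optional

# Hypothetical metric catalogue: phrase in the prompt -> measurement name.
KNOWN_METRICS = {
    "temperature": "temperature",
    "cpu usage": "cpu_usage",
    "humidity": "humidity",
}


def detect_metric(prompt: str) -> Optional[str]:
    """Metric Detector: return the first known metric mentioned in the prompt."""
    text = prompt.lower()
    for phrase, measurement in KNOWN_METRICS.items():
        if phrase in text:
            return measurement
    return None


def detect_window(prompt: str, default: str = "1h") -> str:
    """Turn a phrase such as 'last 24 hours' into a Flux duration like '24h'."""
    match = re.search(r"last\s+(\d+)\s+(minute|hour|day)s?", prompt.lower())
    if not match:
        return default
    unit = {"minute": "m", "hour": "h", "day": "d"}[match.group(2)]
    return f"{match.group(1)}{unit}"


def construct_query(measurement: str, window: str, bucket: str = "telemetry") -> str:
    """Query Constructor: build a single-line Flux query."""
    return (
        f'from(bucket: "{bucket}") '
        f"|> range(start: -{window}) "
        f'|> filter(fn: (r) => r._measurement == "{measurement}")'
    )


def format_query(query: str) -> str:
    """Query Formatter: put each pipeline stage on its own line."""
    return "\n  |> ".join(part.strip() for part in query.split("|>"))
```

Because only measurement names from the catalogue and a digits-plus-unit window ever reach the query string, free text from the prompt is never interpolated into the query.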

The system message provides initial instructions to guide the AI agent’s behavior. It ensures that the agent:

To illustrate how this system works, here’s an interaction between a user and the AI Assistant.

In this screenshot, the user asks for telemetry data insights, and the AI Agent retrieves real-time data from the internal source. The response is structured and context-aware, ensuring accuracy without external dependencies.

As mentioned earlier, the AI Assistant is under strict instructions to respond with accurate and relevant text, so let’s try asking for data that has no record in the data source:

As shown, the user asks about telemetry data that isn’t present in the data source, and the agent replies with "No records found" because it couldn’t find the data the user mentioned.
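One simple way to get this behavior is to handle the empty result in code rather than leaving it to the model. The sketch below assumes each record is a dict with a `value` key; `build_reply` and `NO_RECORDS_MESSAGE` are hypothetical names, and the summary format is only an example.

```python
from typing import Sequence

NO_RECORDS_MESSAGE = "No records found"


def build_reply(metric: str, records: Sequence[dict]) -> str:
    """Summarize the records, or return a fixed message when there are none."""
    if not records:
        # Short-circuit before any LLM call so the model cannot invent values.
        return NO_RECORDS_MESSAGE
    values = [row["value"] for row in records]
    return (
        f"{metric}: {len(values)} readings, "
        f"min {min(values)}, max {max(values)}, latest {values[-1]}"
    )
```

With this guard in place, an empty query result never reaches the language model, so there is nothing for it to fill in from its own training data.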

The AI Assistant isn’t limited to querying your data from the database. It can also answer questions that fall within the related context.

But if a user, feeling a bit hungry, asks the AI Assistant for something unrelated to telemetry data, such as a Koshary recipe, would the AI Assistant still respond appropriately despite the change in context?

This demonstrates that the AI Assistant won’t answer any irrelevant questions, maintaining its focus on the intended context.
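Scope restrictions like this are typically expressed in the system message. The wording below is purely illustrative and is not the assistant's real system message; `SYSTEM_MESSAGE` and `build_messages` are hypothetical names. The `role`/`content` message layout is the one used by OpenAI-style chat APIs.

```python
from typing import List, Optional

# Hypothetical wording; the real system message is not reproduced in this post.
SYSTEM_MESSAGE = (
    "You are an assistant for questions about the organization's telemetry data. "
    "Answer only from records returned by the data source. "
    "If no records are returned, reply exactly: No records found. "
    "If the question is unrelated to telemetry, politely decline to answer."
)


def build_messages(user_prompt: str, history: Optional[List[dict]] = None) -> List[dict]:
    """Place the system message first so it applies to every turn."""
    messages = [{"role": "system", "content": SYSTEM_MESSAGE}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_prompt})
    return messages
```

Calling `build_messages("How do I cook koshary?")` yields a two-item list: the system message followed by the user's question, ready to pass to a chat model.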

To ensure accessibility, the AI Assistant supports multiple languages (English, Arabic, and German), providing insights without losing accuracy.

German:

Arabic (With Dialect support):

To build modern and interactive AI-powered UIs, frameworks like Next.js and Tailwind CSS are highly effective.

For AI development, using LangChain or Ollama provides powerful capabilities for building intelligent applications.

For backend development, Python with a framework like FastAPI ensures efficient API handling and scalability. InfluxDB is a great choice for time-series data storage and analytics.

The AI Assistant can work with different models, such as OpenAI ChatGPT, DeepSeek, and Llama, providing flexibility.
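One common way to keep an assistant model-agnostic is to code against a small interface and plug in a backend per provider. The sketch below is an assumption about how that could look, not the actual design: `ChatModel`, `EchoModel`, and `Assistant` are hypothetical names, and `EchoModel` is only a stand-in where a real backend would call a hosted or local LLM.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Any object with a matching complete() method can back the assistant."""

    def complete(self, system: str, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend for tests; a real one would call a hosted or local LLM."""

    def complete(self, system: str, prompt: str) -> str:
        return f"echo: {prompt}"


class Assistant:
    def __init__(self, model: ChatModel, system_message: str) -> None:
        self.model = model
        self.system_message = system_message

    def ask(self, prompt: str) -> str:
        # The assistant only depends on the ChatModel interface, not on a vendor SDK.
        return self.model.complete(self.system_message, prompt)
```

Swapping providers then means writing one new class with a `complete` method, while the rest of the assistant stays unchanged.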

By ensuring that insights come directly from internal telemetry data, organizations can trust their AI-driven decisions without the risks of external misinformation. Our AI Assistant provides:

✅ Accurate, real-time insights from internal sources

✅ Strict separation from public knowledge bases

✅ LLM-agnostic and future-proof architecture

✅ Consistent, context-aware responses

✅ Multi-language support for global accessibility

As businesses continue to rely on AI for critical decision-making, it's essential to establish a system that prioritizes data integrity and relevance. With this structured approach, organizations can confidently extract insights without the pitfalls of scraping or external dependencies.
